The Skeleton-based Hand Gesture Recognition in the Wild track has been designed to benchmark methods and algorithms that detect and recognize hand gestures in a continuous stream of captured hand joints. It features a benchmark of training and test sequences in which significant gestures are interleaved with non-significant hand movements. Gestures belong to a heterogeneous dictionary including static gestures, coarse dynamic gestures characterized by a global trajectory, and fine dynamic gestures characterized by the articulation of the fingers. The goal is to detect the gestures with an online algorithm and classify them correctly, avoiding false positives. The SHREC contest evaluated the performance of different methods, and the resulting publication is available at https://arxiv.org/abs/2106.10980.

Dataset, annotation revision and call for novel submissions

Dataset

The dataset is made of 180 gesture sequences. Each sequence was planned to include 3 to 5 gestures, padded with semi-random hand movements labeled as non-gesture. The original dictionary contained 18 gestures, divided into static gestures, characterized by a static pose of the hand, and dynamic gestures, characterized by the trajectory of the hand and its joints. The dataset is split into a training set of 108 sequences, originally including 24 examples per gesture class, and a test set of 72 sequences including about 16 examples per class.

However, we discovered some small bugs in the annotation provided for the contest, and we therefore propose an update of the dataset (annotation only). In particular, we removed one gesture class (POINTING), which had relevant issues due to its potential similarity with parts of other gestures and was not correctly annotated in some cases. We also checked and revised the annotations of all the other gestures: the temporal limits (start and end frames) of some gestures have been changed, and a few wrong executions have been detected. The updated dictionary contains the following 17 gestures.

- Static Gestures

- - ONE

- - TWO

- - THREE

- - FOUR

- - OK

- - MENU

- Coarse Dynamic Gestures

- - LEFT

- - RIGHT

- - CIRCLE

- - V

- - CROSS

- Fine Dynamic Gestures

- - GRAB

- - PINCH

- - TAP

- - DENY

- - KNOB

- - EXPAND
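The updated dictionary can be captured in a small lookup structure, for example to validate labels before writing a results file. This is a minimal sketch; the names `GESTURE_DICTIONARY`, `VALID_LABELS` and `category_of`, and the category keys, are hypothetical and not part of any official toolkit.

```python
# Updated gesture dictionary (POINTING removed), grouped by category.
# The category keys are hypothetical names chosen for this sketch.
GESTURE_DICTIONARY = {
    "static": ["ONE", "TWO", "THREE", "FOUR", "OK", "MENU"],
    "coarse_dynamic": ["LEFT", "RIGHT", "CIRCLE", "V", "CROSS"],
    "fine_dynamic": ["GRAB", "PINCH", "TAP", "DENY", "KNOB", "EXPAND"],
}

# Flat set of valid labels, e.g. for validating a results file.
VALID_LABELS = {label for labels in GESTURE_DICTIONARY.values() for label in labels}


def category_of(label: str) -> str:
    """Return the category of a gesture label, or raise KeyError if unknown."""
    for category, labels in GESTURE_DICTIONARY.items():
        if label in labels:
            return category
    raise KeyError(f"unknown gesture label: {label!r}")


print(len(VALID_LABELS))  # → 17
```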

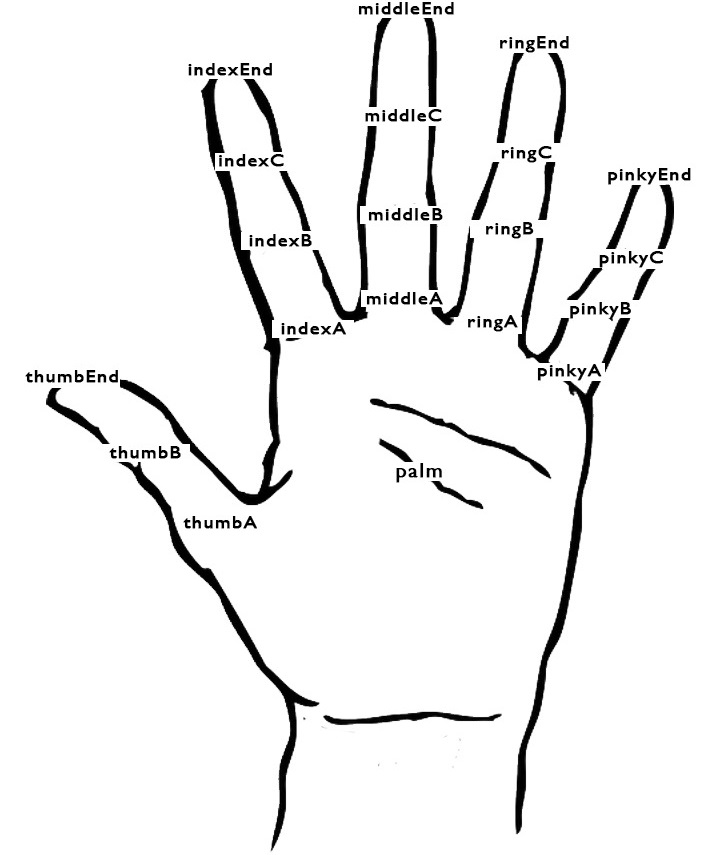

The sensor used to capture the trajectories is a Leap Motion, recording at 50 fps. Each file in the dataset represents one sequence, and each row contains the data of the hand joints captured by the sensor in a single frame. Values in a row are separated by semicolons. Each row includes the position and rotation values for the hand palm and all the finger joints.

The line index (starting from 1) corresponds to the timestamp of the frame.
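Since rows are 1-based frame indices captured at 50 fps, converting between row numbers and elapsed time is a one-line computation. The sketch below assumes that row 1 corresponds to time 0 and that the frame rate is exactly constant; the function names are hypothetical.

```python
FPS = 50  # capture rate stated for this dataset (assumed constant)


def frame_to_seconds(frame_index: int, fps: int = FPS) -> float:
    """Convert a 1-based frame (row) index to seconds since the first frame."""
    if frame_index < 1:
        raise ValueError("frame indices start from 1")
    return (frame_index - 1) / fps


def seconds_to_frame(t: float, fps: int = FPS) -> int:
    """Convert a time in seconds to the nearest 1-based frame index."""
    return round(t * fps) + 1
```

For instance, `frame_to_seconds(51)` gives `1.0`, i.e. row 51 is captured one second after row 1 under these assumptions.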

The structure of a row is summarized in the following scheme:

palmpos(x;y;z); palmquat(x;y;z;w); thumbApos(x;y;z); thumbAquat(x;y;z;w); thumbBpos(x;y;z); thumbBquat(x;y;z;w); thumbEndpos(x;y;z); thumbEndquat(x;y;z;w); indexApos(x;y;z); indexAquat(x;y;z;w); indexBpos(x;y;z); indexBquat(x;y;z;w); indexCpos(x;y;z); indexCquat(x;y;z;w); indexEndpos(x;y;z); indexEndquat(x;y;z;w); middleApos(x;y;z); middleAquat(x;y;z;w); middleBpos(x;y;z); middleBquat(x;y;z;w); middleCpos(x;y;z); middleCquat(x;y;z;w); middleEndpos(x;y;z); middleEndquat(x;y;z;w); ringApos(x;y;z); ringAquat(x;y;z;w); ringBpos(x;y;z); ringBquat(x;y;z;w); ringCpos(x;y;z); ringCquat(x;y;z;w); ringEndpos(x;y;z); ringEndquat(x;y;z;w); pinkyApos(x;y;z); pinkyAquat(x;y;z;w); pinkyBpos(x;y;z); pinkyBquat(x;y;z;w); pinkyCpos(x;y;z); pinkyCquat(x;y;z;w); pinkyEndpos(x;y;z); pinkyEndquat(x;y;z;w)

where the joint positions correspond to those shown in the image above.

Each joint is therefore characterized by 7 floats: three for the position and four for the rotation quaternion. The sequence starts with the palm, followed by the thumb with three joints ending with the tip, and then by the other four fingers with four joints each, also ending with the tip. This gives 20 joints, i.e. 140 values per row.
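A minimal parser for this row layout might look as follows. It is a sketch under stated assumptions: values are split on `;`, surrounding whitespace and empty tokens (e.g. from a trailing semicolon) are ignored, and blank lines occur only at the end of a file. The joint names mirror the scheme above; `parse_row` and `load_sequence` are hypothetical helpers.

```python
from typing import Dict, List, Tuple

# Joint order as listed in the row scheme: palm, thumb (A, B, End),
# then index, middle, ring, pinky (A, B, C, End each): 20 joints in total.
JOINT_NAMES: List[str] = (
    ["palm"]
    + [f"thumb{j}" for j in ("A", "B", "End")]
    + [f"{finger}{j}" for finger in ("index", "middle", "ring", "pinky")
       for j in ("A", "B", "C", "End")]
)
VALUES_PER_JOINT = 7  # x, y, z position + x, y, z, w quaternion
VALUES_PER_ROW = len(JOINT_NAMES) * VALUES_PER_JOINT  # 140

# (position (x, y, z), quaternion (x, y, z, w))
Joint = Tuple[Tuple[float, float, float], Tuple[float, float, float, float]]


def parse_row(line: str) -> Dict[str, Joint]:
    """Parse one semicolon-separated row into {joint name: (position, quaternion)}."""
    # Assumption: empty tokens (e.g. from a trailing ';') are ignored.
    values = [float(tok) for tok in line.strip().split(";") if tok.strip()]
    if len(values) != VALUES_PER_ROW:
        raise ValueError(f"expected {VALUES_PER_ROW} values, got {len(values)}")
    joints: Dict[str, Joint] = {}
    for i, name in enumerate(JOINT_NAMES):
        v = values[i * VALUES_PER_JOINT:(i + 1) * VALUES_PER_JOINT]
        joints[name] = ((v[0], v[1], v[2]), (v[3], v[4], v[5], v[6]))
    return joints


def load_sequence(path: str) -> List[Dict[str, Joint]]:
    """Load a sequence file; element k of the result is frame (row) k + 1."""
    with open(path) as f:
        # Assumption: blank lines, if any, appear only at the end of the file.
        return [parse_row(line) for line in f if line.strip()]
```

Keeping position and quaternion separate per joint makes it easy to drop the rotations, for example if a method only uses joint positions.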

The dataset is split into two parts: a training set, including 108 sequences with approximately 24 gestures per class (the exact number sometimes differs slightly due to the revised annotation), and a test set, including 72 sequences with approximately 16 gestures per class.

Participants should develop methods to detect and classify gestures of the dictionary in the test sequences based on the annotated examples in the training sequences. The developed methods should process data simulating an online detection scenario or, in other words, detect and classify gestures progressively by processing trajectories from beginning to end.
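To illustrate the online constraint, the sketch below consumes frames one at a time and makes each decision using only the current and past frames, kept in a fixed-size sliding window. The window classifier `classify` is a hypothetical placeholder (any trained model could be plugged in), and merging consecutive frames with the same label into one detection is just one simple choice, not the method prescribed by the track.

```python
from collections import deque
from typing import Callable, Iterable, List, Optional, Sequence, Tuple

Frame = Sequence[float]
Detection = Tuple[str, int, int]  # (label, start frame, end frame), 1-based


def detect_online(
    frames: Iterable[Frame],
    classify: Callable[[List[Frame]], Optional[str]],
    window: int = 20,
) -> List[Detection]:
    """Process frames causally and return detected gesture segments.

    `classify` is a hypothetical window classifier returning a gesture
    label or None (non-gesture). Consecutive frames with the same label
    are merged into one detection.
    """
    buffer: deque = deque(maxlen=window)  # sliding window of recent frames
    detections: List[Detection] = []
    current: Optional[str] = None
    start = 0
    t = 0
    for t, frame in enumerate(frames, start=1):
        buffer.append(frame)
        # No decision until the window is full; future frames are never used.
        label = classify(list(buffer)) if len(buffer) == window else None
        if label != current:
            if current is not None:
                detections.append((current, start, t - 1))
            current, start = label, t
    if current is not None:  # close a gesture still open at the end
        detections.append((current, start, t))
    return detections
```

Note that a start frame found this way lags the true gesture onset by up to the window length, which matters when the accuracy of start and end frames is evaluated.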

The annotation and test results format consists of a text file with one row per sequence, containing the following information: the sequence number (the number in the filename of the training/test sequence), followed, for each gesture, by the identified gesture label (the string in the dictionary), the detected start of the gesture (frame/row number in the sequence file) and the detected end of the gesture (frame/row number in the sequence file).
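A minimal sketch of producing such rows is shown below. It assumes that fields are separated by semicolons, as in the data files; the separator and the helper names `format_result_row` and `write_results` are assumptions, not part of an official toolkit.

```python
from typing import Dict, List, Tuple

Detection = Tuple[str, int, int]  # (label, start frame, end frame)


def format_result_row(sequence_number: int, detections: List[Detection]) -> str:
    """Build one row: sequence number, then label, start, end for each gesture.

    Assumption: fields are separated by ';' as in the sequence files.
    """
    fields = [str(sequence_number)]
    for label, start, end in detections:
        if start > end:
            raise ValueError(f"start {start} after end {end} for {label}")
        fields += [label, str(start), str(end)]
    return ";".join(fields)


def write_results(path: str, results: Dict[int, List[Detection]]) -> None:
    """Write one row per sequence, ordered by sequence number."""
    with open(path, "w") as f:
        for seq in sorted(results):
            f.write(format_result_row(seq, results[seq]) + "\n")
```

With made-up frame numbers, `format_result_row(1, [("PINCH", 120, 180), ("ONE", 300, 350)])` returns `"1;PINCH;120;180;ONE;300;350"`.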

For example, if the algorithm detects a PINCH and a ONE in the first sequence, and a THREE, a LEFT and a KNOB in the second, the first two lines of the results file will look like the following:

Task and Evaluation

The evaluation is based on the number of correctly detected gestures, the false positive rate, and the accuracy of the detected start and end frames.
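The sketch below shows how these three quantities could be computed for one sequence. The matching rule used here (same label, overlapping frame intervals, each annotation matched at most once) is an assumption made for illustration and is not the official SHREC evaluation protocol; the function name and output keys are hypothetical.

```python
from typing import Dict, List, Tuple

Gesture = Tuple[str, int, int]  # (label, start frame, end frame)


def evaluate_sequence(predicted: List[Gesture],
                      ground_truth: List[Gesture]) -> Dict[str, float]:
    """Match predictions to annotations in one sequence (illustrative rule only).

    Assumed rule: a prediction is correct if it has the same label as a
    not-yet-matched annotation and their frame intervals overlap.
    """
    matched = [False] * len(ground_truth)
    correct = 0
    start_errors: List[int] = []
    end_errors: List[int] = []
    for label, start, end in predicted:
        for i, (gt_label, gt_start, gt_end) in enumerate(ground_truth):
            overlaps = start <= gt_end and gt_start <= end
            if not matched[i] and gt_label == label and overlaps:
                matched[i] = True
                correct += 1
                start_errors.append(abs(start - gt_start))
                end_errors.append(abs(end - gt_end))
                break
    return {
        "correct": correct,
        "false_positives": len(predicted) - correct,  # unmatched predictions
        "detection_rate": correct / len(ground_truth) if ground_truth else 0.0,
        "mean_start_error": sum(start_errors) / len(start_errors) if start_errors else 0.0,
        "mean_end_error": sum(end_errors) / len(end_errors) if end_errors else 0.0,
    }
```

Errors are measured in frames; dividing by 50 converts them to seconds under the stated capture rate.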