SHREC 2022 track on online detection of heterogeneous gestures

Motivation

Following the interesting results of the previous edition (SHREC'21 track on online gesture recognition in the wild), we are organizing a new edition of the contest, again aimed at benchmarking methods to detect and classify gestures from 3D trajectories of the fingers' joints (a task that requires 3D geometry processing and is therefore of interest to the SHREC community). As the most recent generation of Head-Mounted Displays for mixed reality (Hololens2, Oculus Quest, VIVE) provides accurate finger tracking, gestures can be exploited to create novel interaction paradigms for immersive VR and XR. While we tried to maintain continuity with the 2021 edition, we made some important updates to the gesture dictionary and to the recognition task, and we provide a new dataset. In the new dictionary, we removed the gesture classes that created ambiguities (POINTING, EXPAND), and the evaluation will consider not only the correct detection of gestures but also the recognition latency. The frame rate of the acquisition is lower than in the previous edition and not perfectly regular, so the timestamps of the captured frames are provided with the data.

Dataset

The dataset includes 288 sequences, each containing a variable number of gestures. It is divided into a training set, with provided annotations, and a test set where the gestures have to be found according to the protocol detailed below.

Click here to download the training data (with annotations)

Click here to download the test data (without annotations)

Finger data are captured with a Hololens2 device simulating mixed reality interaction. Time sequences are saved as text files where each row represents the data of a specific time frame with the coordinates of 26 joints. Each joint is characterized by 3 floats (x, y, z position), so each row is encoded as:

Frame Index(integer); Time_stamp(float); Joint1_x (float); Joint1_y; Joint1_z; Joint2_x; Joint2_y; Joint2_z; .....
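A row in this layout could be read as in the minimal sketch below. It assumes that each row holds exactly 2 + 3 × 26 = 80 semicolon-separated values and that a trailing semicolon may be present; the names `Frame` and `parse_frame` are hypothetical and not part of the distributed data or tools.

```python
from typing import List, NamedTuple, Tuple

NUM_JOINTS = 26  # joints per frame, as stated in the dataset description


class Frame(NamedTuple):
    index: int                                # frame index (first column)
    timestamp: float                          # capture time (second column)
    joints: List[Tuple[float, float, float]]  # one (x, y, z) triple per joint


def parse_frame(row: str) -> Frame:
    """Parse one semicolon-separated row of a sequence file (assumed layout)."""
    # Drop empty fields so that a trailing ';' does not produce a bogus value.
    fields = [f.strip() for f in row.strip().split(";") if f.strip()]
    expected = 2 + 3 * NUM_JOINTS
    if len(fields) != expected:
        raise ValueError(f"expected {expected} fields, got {len(fields)}")
    index = int(fields[0])
    timestamp = float(fields[1])
    coords = [float(v) for v in fields[2:]]
    # Group the flat coordinate list into consecutive (x, y, z) triples.
    joints = [tuple(coords[i:i + 3]) for i in range(0, len(coords), 3)]
    return Frame(index, timestamp, joints)
```

Since the frame rate is not perfectly regular, keeping the timestamp next to the frame index makes it possible to convert frame intervals into durations later on.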

The order of the joints is described here (click for description) and can also be found in the MRTK documentation available at this link

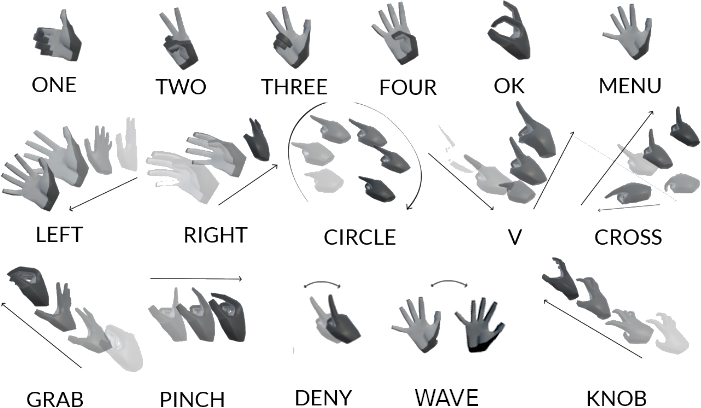

The gesture dictionary is similar to the SHREC2021 one, but with a few changes. The current dictionary is composed of 16 gestures divided into 4 categories: static, characterized by a pose kept fixed (for at least 0.5 sec); dynamic, characterized by a single trajectory of the hand; fine-grained dynamic, characterized by the articulation of the fingers; and dynamic-periodic, where the same finger motion pattern is repeated several times. The description of the gestures is reported in this table.

- Static Gestures

- - ONE

- - TWO

- - THREE

- - FOUR

- - OK

- - MENU

- Dynamic Gestures

- - LEFT

- - RIGHT

- - CIRCLE

- - V

- - CROSS

- Fine-grained dynamic Gestures

- - GRAB

- - PINCH

- Dynamic-periodic Gestures

- - DENY

- - WAVE

- - KNOB

Training sequences are distributed together with an annotation file, annotations.txt. It is a text file with one line per sequence, in which each gesture label is followed by two integers: the gesture execution start (frame index, annotated in post-processing) and the gesture execution end (frame index, annotated in post-processing). For example, if the first sequence features a PINCH and a ONE and the second a THREE, a LEFT and a KNOB, the first two lines of the annotation file may look like the following:

- 1;PINCH;10;30;ONE;85;13;

- 2;THREE;18;75;LEFT;111;183;KNOB;222;298;

- ....
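A line of this kind could be parsed as in the sketch below. The function name `parse_gesture_line` is hypothetical, and the parser assumes that gesture labels never consist of digits only, so any non-numeric token starts a new gesture and the integers that follow belong to it. The sample line in the usage note uses made-up values, not real annotations.

```python
from typing import List, Tuple

Gesture = Tuple[str, List[int]]  # (label, frame indices that follow the label)


def parse_gesture_line(line: str) -> Tuple[int, List[Gesture]]:
    """Split 'seq;LABEL;n;n;LABEL;n;n;' into a sequence id and its gestures."""
    # Ignore empty fields so that the trailing ';' is harmless.
    tokens = [t.strip() for t in line.strip().split(";") if t.strip()]
    if not tokens:
        raise ValueError("empty line")
    seq_id = int(tokens[0])
    gestures: List[Gesture] = []
    for tok in tokens[1:]:
        if tok.isdigit():
            if not gestures:
                raise ValueError("number found before any gesture label")
            gestures[-1][1].append(int(tok))  # number belongs to the last label
        else:
            gestures.append((tok, []))        # a new gesture label starts here
    return seq_id, gestures
```

For instance, `parse_gesture_line("3;GRAB;5;40;WAVE;60;120;")` returns `(3, [("GRAB", [5, 40]), ("WAVE", [60, 120])])`. Because the numbers are collected per label, the same function also reads lines that carry three numbers per gesture.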

Task and evaluation

Participants should develop methods to detect and classify the gestures of the dictionary in the test sequences, based on the annotated examples in the training sequences. The methods should process the data simulating an online detection scenario; in other words, they should detect and classify gestures progressively while processing the trajectories from beginning to end. The submission must consist of a text file with one row per sequence containing the sequence number (the number in the filename of the training/test sequence) and, for each identified gesture, a text string and three numbers, all separated by semicolons. The string must be the gesture label (as written in the dictionary). The three numbers must be, in order, the frame number of the detected gesture start, the frame number of the detected gesture end, and the frame number of the last frame used by the algorithm for the gesture start prediction. All frame numbers refer to the first column of the sequence file. For example, if the algorithm detects a PINCH and a ONE in the first sequence and a THREE, a LEFT, and a KNOB in the second, the first two lines of the results file will look like the following:

- 1;PINCH;12;54;32;ONE;82;138;103;

- 2;THREE;18;75;38;LEFT;111;183;131;KNOB;222;298;318;
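A results row in this format could be produced as in the sketch below. The helper `format_result_line` and the `Detection` tuple are hypothetical names; the label check against the 16 dictionary entries is an added safeguard, not a stated requirement of the track.

```python
from typing import Iterable, Tuple

# The 16 labels of the gesture dictionary listed in the Dataset section.
GESTURES = {
    "ONE", "TWO", "THREE", "FOUR", "OK", "MENU",  # static
    "LEFT", "RIGHT", "CIRCLE", "V", "CROSS",      # dynamic
    "GRAB", "PINCH",                              # fine-grained dynamic
    "DENY", "WAVE", "KNOB",                       # dynamic-periodic
}

# (label, start frame, end frame, last frame used for the start prediction)
Detection = Tuple[str, int, int, int]


def format_result_line(seq_id: int, detections: Iterable[Detection]) -> str:
    """Build one semicolon-separated results row, ending with a ';'."""
    parts = [str(seq_id)]
    for label, start, end, last_used in detections:
        if label not in GESTURES:
            raise ValueError(f"unknown gesture label: {label}")
        parts += [label, str(start), str(end), str(last_used)]
    return ";".join(parts) + ";"
```

Calling `format_result_line(1, [("PINCH", 12, 54, 32), ("ONE", 82, 138, 103)])` gives `1;PINCH;12;54;32;ONE;82;138;103;`, which matches the first example row above.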

The evaluation is based on the number of correctly detected gestures, the false positive rate, and the detection latency. The Jaccard Index of the continuous annotation of the frame sequence will also be evaluated. A gesture will be considered correctly detected if it is continuously detected with the same label in a time window whose intersection with the ground truth annotation covers more than 50% of its length. The detection latency will be estimated as the difference between the actual gesture start and the reported timestamp of the last frame used for the prediction.
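The criteria above can be illustrated with the sketch below, which is not the official evaluation code. It rests on several assumptions: frame intervals are inclusive, "its length" is read as the length of the ground truth interval, latency is the timestamp of the last frame used minus the timestamp of the annotated start, and the Jaccard Index is computed on sets of frame indices. All function names are hypothetical.

```python
from typing import Dict, Set


def overlap_fraction(gt_start: int, gt_end: int,
                     det_start: int, det_end: int) -> float:
    """Fraction of the ground truth interval covered by the detection."""
    inter = min(gt_end, det_end) - max(gt_start, det_start) + 1
    gt_len = gt_end - gt_start + 1
    return max(inter, 0) / gt_len


def is_correct(gt_label: str, gt_start: int, gt_end: int,
               det_label: str, det_start: int, det_end: int) -> bool:
    """Same label and more than 50% of the ground truth interval covered."""
    covered = overlap_fraction(gt_start, gt_end, det_start, det_end)
    return det_label == gt_label and covered > 0.5


def latency(timestamps: Dict[int, float], gt_start: int, last_used: int) -> float:
    """Time between the annotated start and the last frame used to predict it."""
    return timestamps[last_used] - timestamps[gt_start]


def jaccard(gt_frames: Set[int], det_frames: Set[int]) -> float:
    """Intersection over union of two sets of frame indices."""
    union = gt_frames | det_frames
    return len(gt_frames & det_frames) / len(union) if union else 1.0
```

With a PINCH annotated on frames 10 to 30 and detected on frames 12 to 54, the detection covers 19 of the 21 ground truth frames, so `is_correct` returns `True`. The `timestamps` dictionary maps frame indices to the time stamps stored in the second column of the sequence file.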

Registration and submission guidelines

Participants should register by sending an e-mail to andrea.giachetti(at)univr.it by the registration deadline reported below. Each registered group should then complete the submission by the strict deadline of February 28, sending an e-mail with the files attached or with links for downloading them. The submission may include up to three text files with results formatted as described above; the results can be obtained with different algorithms or parameter settings. Submissions should preferably include executable code and instructions to reproduce the results. Each group must also submit, by the same strict deadline, a 1-page LaTeX description of the method used, including all the important implementation details and reporting the computation time for the classification of a single frame. Feedback on the evaluation will be provided to the participants as soon as possible, in order to prepare the draft paper with the outcomes.

IMPORTANT DATES

- 28/1/2022 Dataset released

- 11/2/2022 Registration deadline

- 28/2/2022 Results submission deadline

- 15/3/2022 Draft paper with the track outcomes submitted to Computers & Graphics

Organizers

Organizing team

- Marco Emporio - VIPS lab, University of Verona

- Anton Pirtac - VIPS lab, University of Verona

- Ariel Caputo - VIPS lab, University of Verona

- Marco Cristani - VIPS lab, University of Verona

- Andrea Giachetti - VIPS lab, University of Verona
